[Abstract]

This entry compares my consciousness hypothesis with IIT (Integrated Information Theory) and clarifies where the two differ. It also reconfirms that minimum consciousness can be accepted even with minimum information elements.

Next, using the Chinese room metaphor, I explain step by step what needs to be added to go from minimum consciousness to typical human consciousness. The consciousness building block concept is based on this idea. I hope this concept helps move consciousness research, and the scientific modeling of consciousness, forward.

[Considering IIT (Integrated Information Theory): confirming lower-order (minimum) consciousness as well as higher-order consciousness]

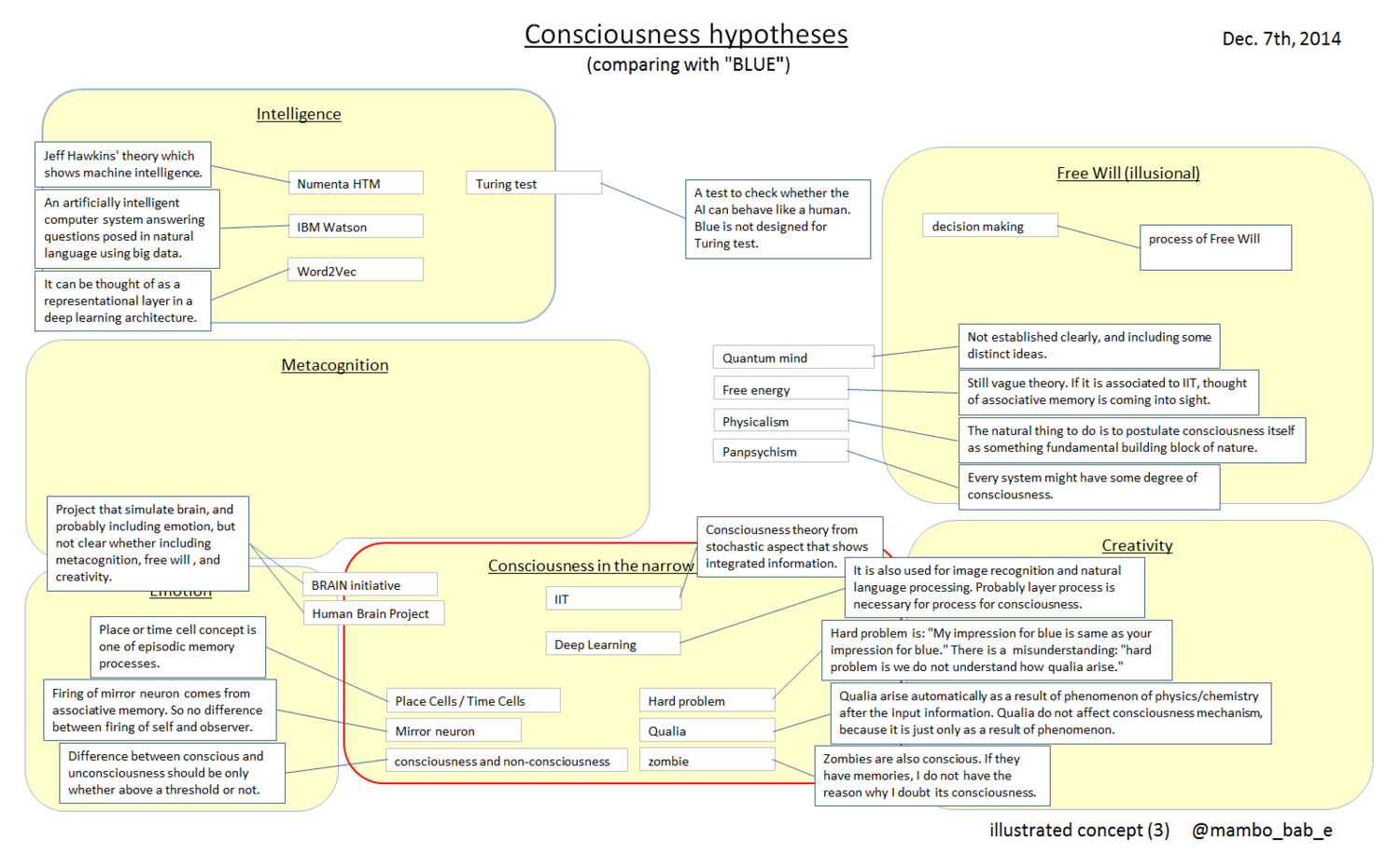

My consciousness hypothesis has a strong affinity with IIT (Integrated Information Theory). Both hold that 'consciousness can be measured' and that 'even inanimate objects can be accepted as conscious if they have a few information elements'.

But IIT has some restrictions, for example 'feedback is required'. My hypothesis, in contrast, basically removes all such restrictions. IIT holds that the cerebellum doesn't have consciousness, since it doesn't have feedback.

My consciousness hypothesis, on the other hand, considers that even the cerebellum has consciousness, since it uses elements and circuits similar to those of conscious matter. With this view we can solve the discontinuity problem that 'consciousness would suddenly become zero as feedback fades out', and we can resolve the conflict of 'a case that has no consciousness even though there is qualia input'. So in my consciousness hypothesis there is nothing that doesn't have consciousness. This may provoke rejection from people who don't accept the consciousness of a photodiode.

But IIT may help in understanding it, because IIT has a certain degree of recognition. The explanation is: 'My consciousness hypothesis extends the IIT definition in the minimum direction.' Please note, though, that my hypothesis model isn't the 'stone' model but a 'with feedback' model. This is in order to explain minimum consciousness as a theory.

As you may know, IIT says the cerebellum and the photodiode don't have consciousness, since Φ=0. Meanwhile, considering IIT and its extension, we can picture it as follows (at this point feedback isn't shown as a restriction for consciousness):

If we will get consciousness meter with IIT,

— mambo_bab_e (@mambo_bab_e) December 7, 2014

human: 100000000

monkey: 10000000

mouse: 1000000

fish: 50000

microbe: 100 to 1000

You think so?

With consciousness meter

— mambo_bab_e (@mambo_bab_e) December 7, 2014

human: 100000000

internet: 1000000

country: 100000

school of fish: 100000

virus: 10

photodiode: 1

More interesting?

At least regarding 'consciousness with feedback', both have the same mechanism: 'each has a circuit that can judge whether its input is the same as memory or not'. With this we should first stand at the starting point for understanding consciousness science.

When we extend in the minimum direction, a ROM circuit that has the same circuit and information elements as the circuit with feedback should have consciousness under my hypothesis, though IIT doesn't accept that it has consciousness, because it doesn't actually have feedback. Similarly, a simpler ROM circuit, or in the extreme case even a stone (treated as having a sensor), should have consciousness under my hypothesis. Since my consciousness hypothesis accepts extending consciousness in the minimum direction, many people pay attention to the consciousness of a stone.

On the other hand, if a theory has the restriction of feedback or of some other higher-order consciousness, a stone (treated as having a sensor) doesn't have consciousness. In that case having a sensor is not enough. The explanation then starts from the integration of information, which probably works by treating feedback as a necessary condition. But then how about self consciousness or counterfactual thinking?

With my hypothesis, which is based on minimum consciousness, memories as feedback can be added to minimum consciousness, and memories as self consciousness or counterfactual thinking can also be added step by step.

However, if the restriction of feedback or other higher-order conditions is necessary, it's a little difficult to explain. For example, if feedback is necessary as the starting point for consciousness, it's difficult to explain why self consciousness or counterfactual thinking isn't the starting point. The question is why feedback can make a discontinuity between conscious and not conscious, while self consciousness or counterfactual thinking can't. If IIT says it's a matter of definition, we can't argue against it. As for other higher-order consciousness, for example if counterfactual thinking were the restriction for consciousness, we couldn't say something is conscious even though there is qualia input and feedback, could we?
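The judging circuit described above, and the contrast between a ROM circuit and a circuit with feedback, can be pictured with a minimal Python sketch. The class `MatchCircuit`, its `feedback` flag, and the sample signals are hypothetical names chosen only for illustration; they are not taken from IIT or from the toy model program, and writing unmatched inputs back into memory is just one assumed way to model feedback.

```python
from dataclasses import dataclass, field


@dataclass
class MatchCircuit:
    """Hypothetical circuit that judges whether an input equals a stored memory."""
    memory: set = field(default_factory=set)
    feedback: bool = False  # assumption: feedback = unmatched inputs become new memories

    def judge(self, signal) -> bool:
        matched = signal in self.memory
        if not matched and self.feedback:
            # Only the circuit with feedback can change its own memory.
            self.memory.add(signal)
        return matched


# Same circuit and same initial information elements; only feedback differs.
rom = MatchCircuit(memory={"light"}, feedback=False)
with_feedback = MatchCircuit(memory={"light"}, feedback=True)

print(rom.judge("light"))            # True: input is the same as memory
print(rom.judge("dark"))             # False
print(rom.judge("dark"))             # False: the ROM circuit never changes
print(with_feedback.judge("dark"))   # False the first time
print(with_feedback.judge("dark"))   # True once the input has become a memory
```

In this sketch both circuits perform the same judgment on every input; the only difference is whether memory can change afterwards, which is the point where IIT and my hypothesis part ways.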

[Chinese room metaphor and consciousness building block concept]

This is an old metaphor, used to argue that computers can't have consciousness. Though consciousness researchers today don't use it just as it is, some people still think of it as a symbol of the mystery of consciousness. I, for example, think system theory can explain it completely. Meanwhile, the Chinese room's assertion would be that even though the room has information and there are input and output, that wouldn't be enough to be considered human consciousness.

Actually, if there is a filter that requires human-level consciousness, almost no object can pass the consciousness Turing test. The important point here is the human-level filter. When that filter is used to decide whether an object has consciousness or not, the degree of difficulty increases extremely, since higher-order (human-level) consciousness is required.

The next step is how to remove the filter. If you have followed from the beginning to here, you will find a precondition: there is lower-order (minimum) consciousness before we consider higher-order consciousness. Though few people may be able to describe their impression of lower-order (minimum) consciousness, examples are non-humans such as dolphins, octopuses, or bees, and others extending in the minimum direction. It doesn't show self consciousness, counterfactual thinking, or emotion, which are regarded as higher-order consciousness.

A few people may notice that my consciousness hypothesis is that higher-order consciousness, such as self consciousness or counterfactual thinking, is based on lower-order (minimum) consciousness. And when extending in the minimum direction, unit qualia are needed. To say a little more: semantic understanding, creativity, counterfactual thinking, emotion, metacognition, self consciousness, and 'illusional' free will, all of which are said to be human characteristics, are based on minimum consciousness.

And to say a little more about the Chinese room: with my hypothesis, a Chinese room can have easy-to-understand consciousness, built on a simple Chinese room that has minimum semantic understanding and minimum consciousness.
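As a rough picture of the filter, it can be treated as a threshold on a consciousness meter. The Python sketch below reuses the illustrative meter values from the tweets quoted earlier in this entry; the threshold names `HUMAN_LEVEL` and `MINIMUM` and the function `accepted` are hypothetical, and treating the filter as a simple numeric cutoff is an assumption made only for this example.

```python
# Illustrative meter values, copied from the tweets quoted earlier in this entry.
METER = {
    "human": 100_000_000,
    "internet": 1_000_000,
    "country": 100_000,
    "school of fish": 100_000,
    "virus": 10,
    "photodiode": 1,
}

HUMAN_LEVEL = METER["human"]  # assumed filter: human-level consciousness is required
MINIMUM = 1                   # assumed: filter removed, minimum consciousness is enough


def accepted(threshold):
    """Return the objects whose meter value reaches the threshold."""
    return [name for name, value in METER.items() if value >= threshold]


print(accepted(HUMAN_LEVEL))  # ['human']
print(accepted(MINIMUM))      # every object in METER
```

With the human-level filter only the human passes; once the filter is removed, every object on the meter is accepted, differing only in degree.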

Please see

The semantic understanding TAUTOLOGY hypothesis, which should underlie AI research. - BLUE & ORANGE blog_e

for semantic understanding and please see

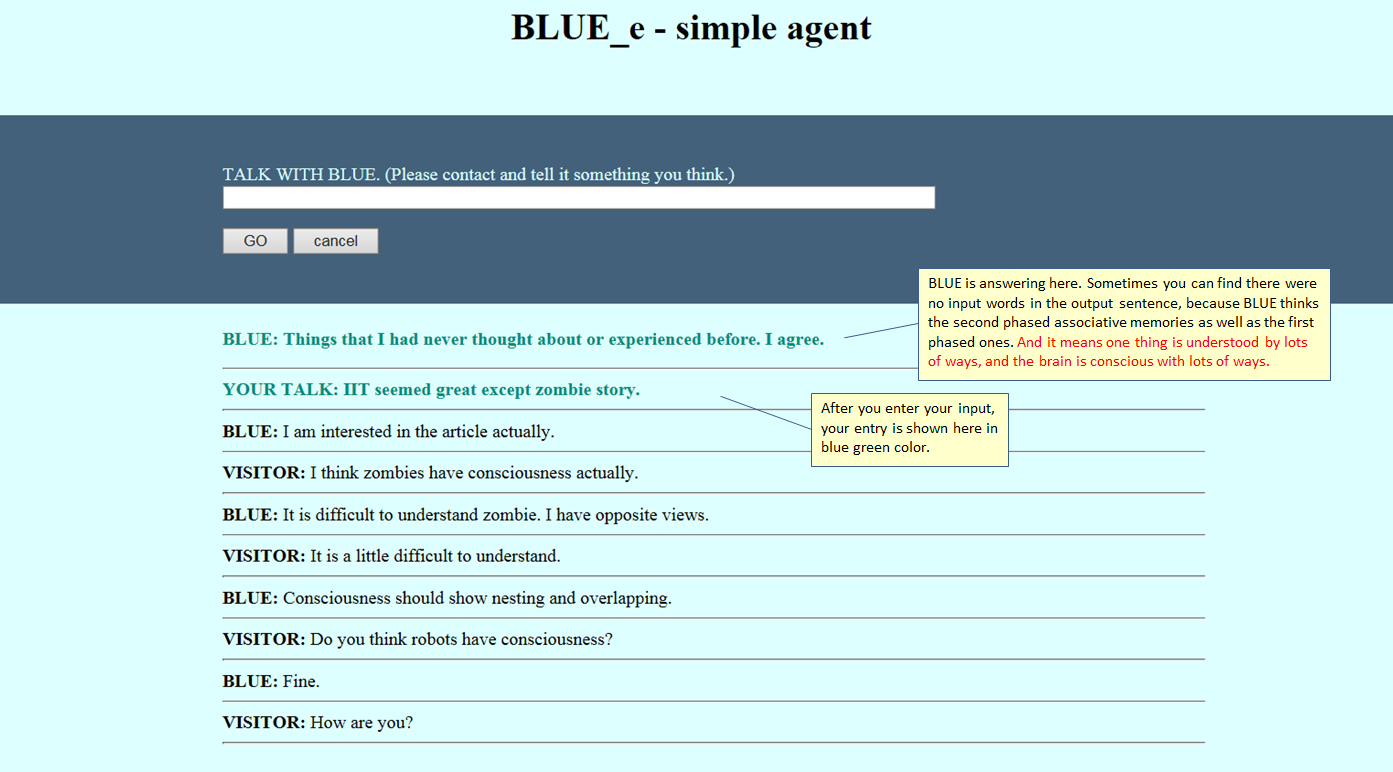

The new hypothesis of consciousness mechanism. - Consciousness toy model program was released. - BLUE & ORANGE blog_e

for the consciousness hypothesis.

[CONSCIOUSNESS BUILDING BLOCK CONCEPT]

1. Chinese room has minimum semantic understanding and minimum consciousness.

Based on the above:

2. to have new memories -> feedback

3. to have associative memories -> creativity

4. to have associative memories -> counterfactual thinking

5. to have flag circuit -> metacognition

6. to collect case information that includes a part of self (a physical body makes it easy to explain the case where input is sensed at the same time as part of the output) -> self consciousness

7. to have instinctive motivation as memories -> emotion

8. to have the case information of self and others' decision making, with motivation (shown above) -> 'illusional' free will

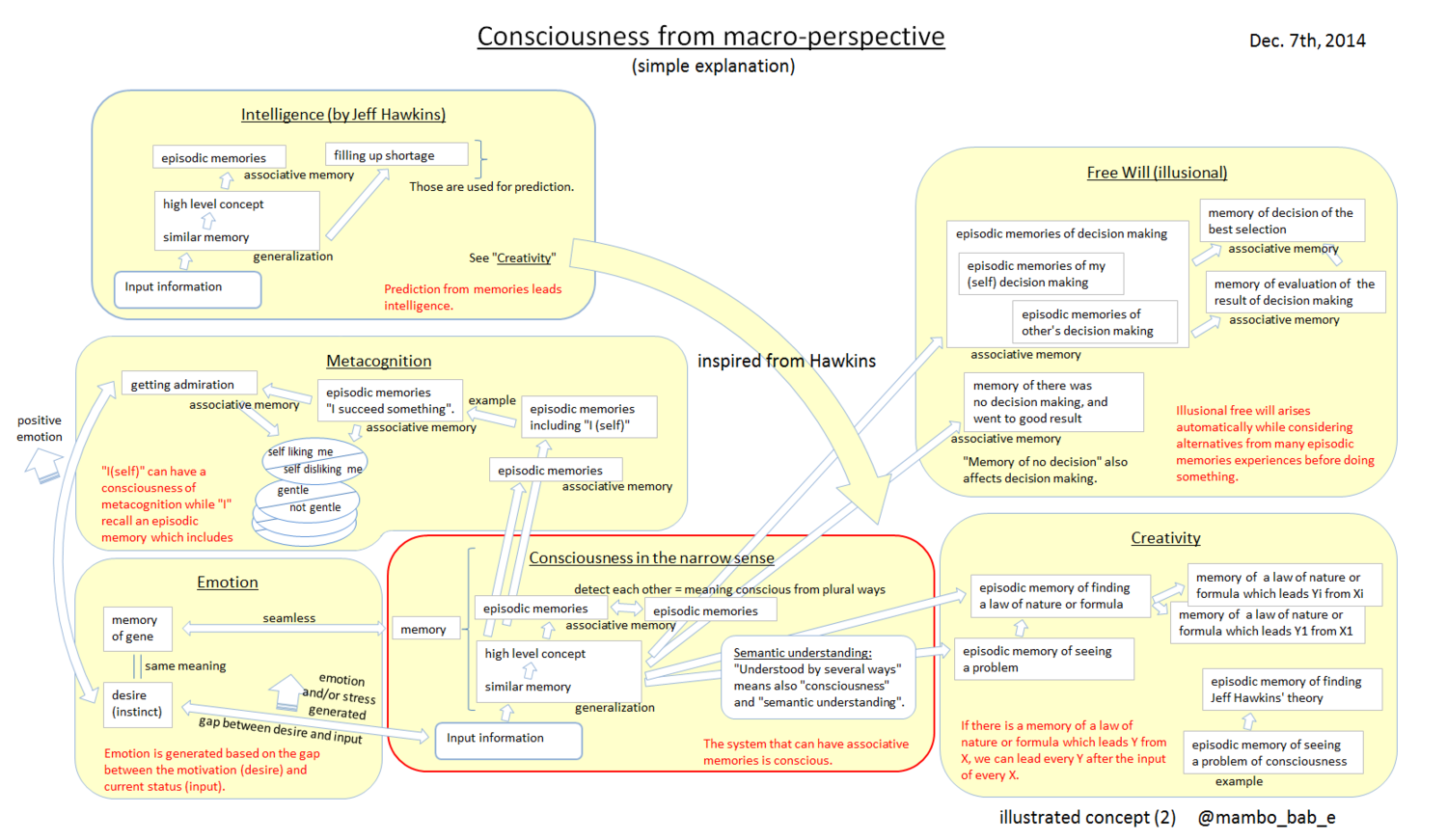

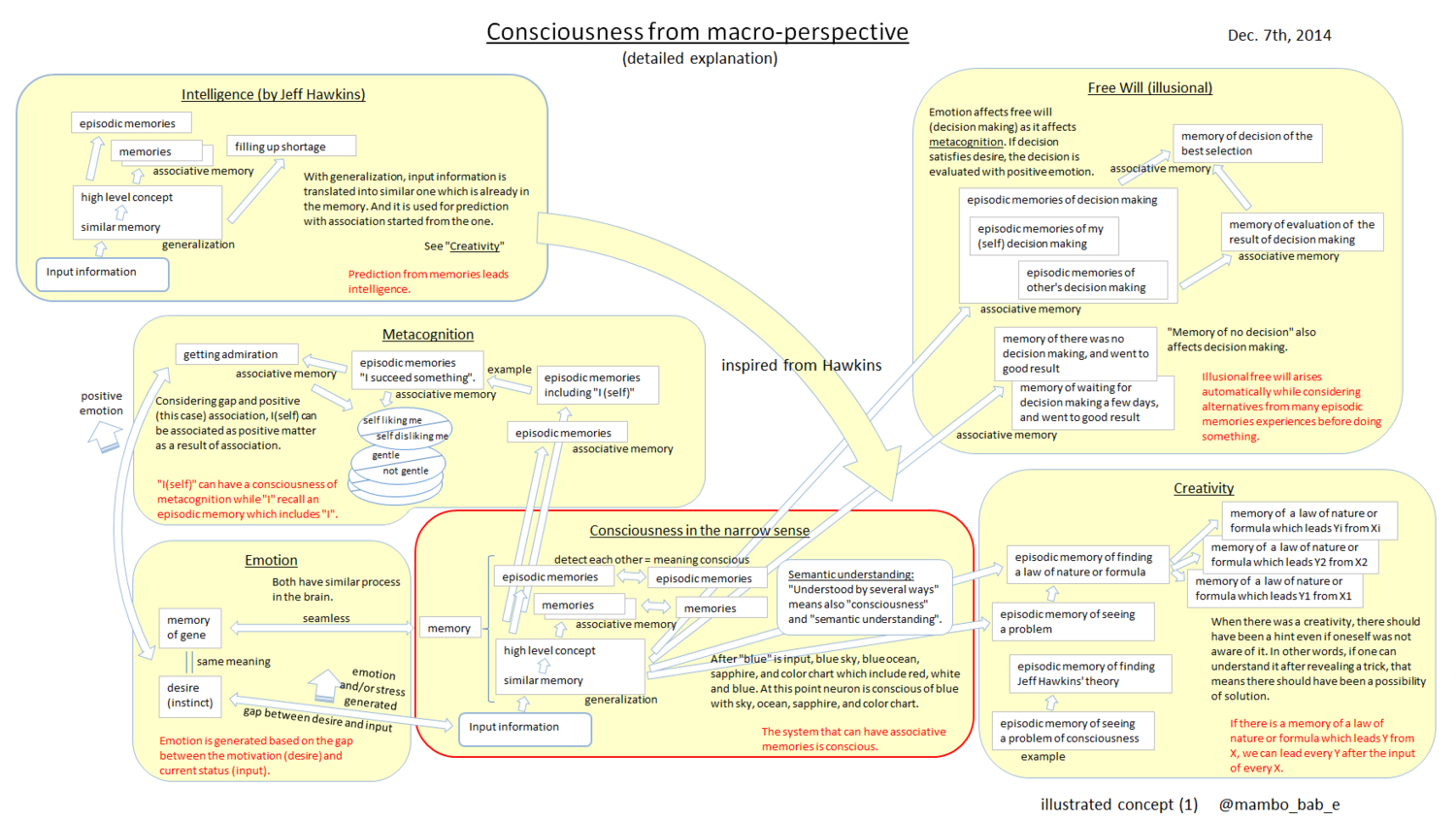
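The numbered list above can also be written down as a small data structure. The following Python sketch is only one assumed encoding: the names `Block`, `BLOCKS`, and `functions_for` are hypothetical, and the dependency of item 8 on the motivation of item 7 is modeled with a simple `needs` field.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    component: str     # what is added on top of minimum consciousness
    function: str      # the function that the list says it yields
    needs: tuple = ()  # other components that must also be present

BASE = "minimum semantic understanding and minimum consciousness"  # item 1

MOTIVATION = "instinctive motivation as memories"

BLOCKS = [  # items 2 to 8
    Block("new memories", "feedback"),
    Block("associative memories", "creativity"),
    Block("associative memories", "counterfactual thinking"),
    Block("flag circuit", "metacognition"),
    Block("case information including a part of self", "self consciousness"),
    Block(MOTIVATION, "emotion"),
    Block("case information of self and others' decision making",
          "'illusional' free will", needs=(MOTIVATION,)),
]


def functions_for(components):
    """List what a system shows, given the components added on top of BASE."""
    shown = [BASE]
    for block in BLOCKS:
        if block.component in components and all(n in components for n in block.needs):
            shown.append(block.function)
    return shown


print(functions_for(set()))
print(functions_for({"associative memories", "flag circuit"}))
```

An empty set of components still returns the base block, which mirrors the idea that every higher-order function is stacked on minimum consciousness rather than replacing it.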

Based on No.1, each of the others can come next. This shows the consciousness building block concept based on minimum consciousness. This is the same as my 2014 hypothesis, but it should be easier to understand. It would be difficult to see this unless you notice that all those neuroscience activities are based on simple minimum consciousness activities.

Showing illustrated concept of consciousness mechanism. - semantic understanding, creativity, counterfactual thinking, emotion, metacognition, self consciousness and 'illusional' free will are also explained

The illustrated concept is based on the consciousness building block concept, and should make the consciousness mechanism easier to understand. All those activities are based on minimum consciousness elements that IIT doesn't regard as consciousness. Minimum is the key for modeling.

The illustrated concept (2014) and the consciousness building block concept are based on the same concept. But reconstructing the elements and documenting them again should help in understanding consciousness together with the semantic understanding TAUTOLOGY hypothesis.

IIT researchers seem not to have an interest in consciousness models. Though I couldn't find modeling in recent papers, I hope for a reconfirmation of whether the restrictions are necessary and of the importance of researching the minimum direction of consciousness with modeling. After that reconfirmation, @DeepMindAI and @demishassabis might understand the importance of researching consciousness.

My consciousness hypothesis is inspired by Numenta's intelligence theory. I believe the consciousness hypothesis has a strong affinity with intelligence theory, but many people haven't noticed it so far. In Japan there would be fewer than one place that could employ me to research consciousness. Worldwide there would be ten times as many. But considering qualifications or past results, that would drop to one-tenth. Still, I hope consciousness research moves forward worldwide.

This entry is a retouched version of my tweets from 5/5/2018 - 5/6/2018.